Test YOUR Business in Minutes

Create your account and launch your AI chatbot in minutes. Fully customizable, no coding required - start engaging your customers instantly!

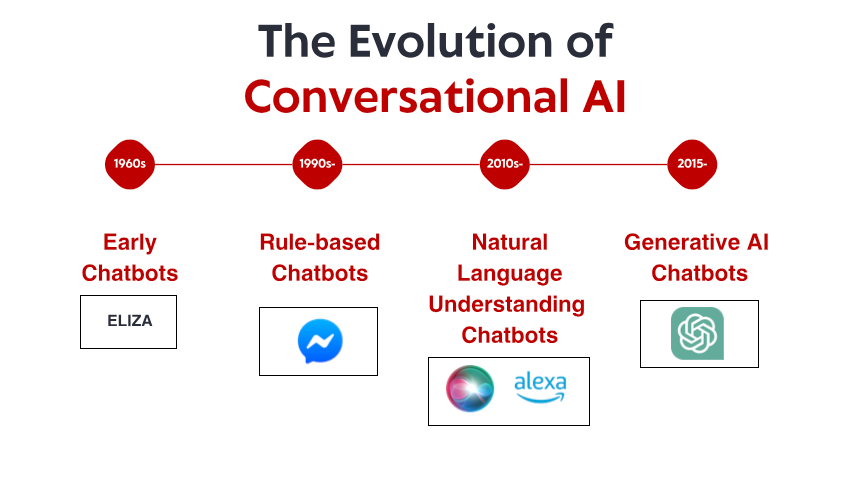

The Humble Beginnings: Early Rule-Based Systems

What made ELIZA, the program Joseph Weizenbaum built at MIT in the mid-1960s, remarkable wasn't its technical sophistication – by today's standards, the program was incredibly basic. Rather, it was the profound effect it had on users. Despite knowing they were talking to a computer program with no actual understanding, many people formed emotional connections with ELIZA, sharing deeply personal thoughts and feelings. This phenomenon, which Weizenbaum himself found disturbing, revealed something fundamental about human psychology and our willingness to anthropomorphize even the simplest conversational interfaces.

Throughout the 1970s and 1980s, rule-based chatbots followed ELIZA's template with incremental improvements. Programs like PARRY (simulating a person with paranoid schizophrenia) and RACTER (which "authored" a book called "The Policeman's Beard Is Half Constructed") remained firmly within the rule-based paradigm – using predefined patterns, keyword matching, and templated responses.
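To make the rule-based paradigm concrete, here is a minimal sketch of keyword matching with templated responses. The rules and the fallback line are invented for illustration and are not taken from ELIZA, PARRY, or RACTER.

```python
import re

# Hypothetical rules for illustration: each pairs a regex with a response template.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father|family)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def respond(user_input: str) -> str:
    """Return the templated response of the first rule that matches."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured fragment back inside the template.
            return template.format(*match.groups())
    # No rule matched: the program has nothing to fall back on but a stock phrase.
    return FALLBACK


print(respond("I feel anxious about work."))
print(respond("What's the weather like?"))
```

The second call shows how quickly the illusion breaks: any input outside the hand-written patterns gets the same stock reply.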

These early systems had severe limitations. They couldn't actually understand language, learn from interactions, or adapt to unexpected inputs. Their knowledge was limited to whatever rules their programmers had explicitly defined. When users inevitably strayed outside these boundaries, the illusion of intelligence quickly shattered, revealing the mechanical nature underneath. Despite these constraints, these pioneering systems established the foundation upon which all future conversational AI would build.

The Knowledge Revolution: Expert Systems and Structured Information

Expert systems like MYCIN (which diagnosed bacterial infections) and DENDRAL (which identified chemical compounds) organized information in structured knowledge bases and used inference engines to draw conclusions. When applied to conversational interfaces, this approach allowed chatbots to move beyond simple pattern matching toward something resembling reasoning – at least within narrow domains.
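The split between a knowledge base and an inference engine can be sketched in a few lines. The example below assumes a simple forward-chaining engine and uses invented textbook-style rules; MYCIN and DENDRAL used far richer rule languages (MYCIN, for instance, attached certainty factors to its rules), which this sketch leaves out.

```python
# Knowledge base: hypothetical if-then rules as (set of premises, conclusion).
RULES = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "cannot_fly", "swims"}, "is_penguin"),
]


def forward_chain(facts: set[str]) -> set[str]:
    """Inference engine: apply rules until no new conclusion can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in RULES:
            # A rule fires when all of its premises are already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"has_feathers", "cannot_fly", "swims"})
print(sorted(derived))
```

Note that the second rule can only fire after the first has added `is_bird`, which is the chaining step that separates this from flat pattern matching.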

Companies began implementing practical applications like automated customer service systems using this technology. These systems typically used decision trees and menu-based interactions rather than free-form conversation, but they represented early attempts to automate interactions that previously required human intervention.

The limitations remained significant. These systems were brittle, unable to handle unexpected inputs gracefully. They required enormous efforts from knowledge engineers to manually encode information and rules. And perhaps most importantly, they still couldn't truly understand natural language in its full complexity and ambiguity.

Nevertheless, this era established important concepts that would later become crucial for modern conversational AI: structured knowledge representation, logical inference, and domain specialization. The stage was being set for a paradigm shift, though the technology wasn't quite there yet.

Natural Language Understanding: The Computational Linguistics Breakthrough

The shift from hand-written rules toward statistical natural language processing was enabled by several factors: increasing computational power, better algorithms, and crucially, the availability of large text corpora that could be analyzed to identify linguistic patterns. Systems began incorporating techniques like:

Part-of-speech tagging: Identifying whether words were functioning as nouns, verbs, adjectives, etc.

Named entity recognition: Detecting and classifying proper names (people, organizations, locations).

Sentiment analysis: Determining the emotional tone of text.

Parsing: Analyzing sentence structure to identify grammatical relationships between words.
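Of the techniques listed above, sentiment analysis is the easiest to sketch without a trained model. The example below assumes a tiny hand-picked word list and a one-word negation rule; systems of this era would typically learn such associations from labeled corpora rather than hard-code them.

```python
import re

# Hypothetical mini-lexicon; real systems learn word weights from labeled text.
POSITIVE = {"great", "love", "helpful", "fast"}
NEGATIVE = {"slow", "broken", "hate", "useless"}
NEGATORS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Label text as positive, negative, or neutral from lexicon hits."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        # Flip polarity when the previous word is a negator ("not helpful").
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love how fast this is"))
print(sentiment("The app is not helpful"))
```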

One notable breakthrough came with IBM's Watson, which famously defeated human champions on the quiz show Jeopardy! in 2011. While not strictly a conversational system, Watson demonstrated unprecedented abilities to understand natural language questions, search through vast knowledge repositories, and formulate answers – capabilities that would prove essential for the next generation of chatbots.

Commercial applications soon followed. Apple's Siri launched in 2011, bringing conversational interfaces to mainstream consumers. While limited by today's standards, Siri represented a significant advancement in making AI assistants accessible to everyday users. Microsoft's Cortana, Amazon's Alexa, and Google Assistant would follow, each pushing forward the state of the art in consumer-facing conversational AI.

Despite these advances, systems from this era still struggled with context, common sense reasoning, and generating truly natural-sounding responses. They were more sophisticated than their rule-based ancestors but remained fundamentally limited in their understanding of language and the world.

Machine Learning and the Data-Driven Approach

This era saw the rise of intent classification and entity extraction as core components of conversational architecture. When a user made a request, the system would:

1. Classify the overall intent (e.g., booking a flight, checking weather, playing music)
2. Extract relevant entities (e.g., locations, dates, song titles)
3. Map these to specific actions or responses
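The three steps above can be sketched as follows. Keyword overlap and a regex stand in here for the trained classifier and entity extractor a real system would use, and the intent names, patterns, and handlers are all hypothetical.

```python
import re

# Step 1 stand-in: keyword sets instead of a trained intent classifier.
INTENT_KEYWORDS = {
    "book_flight": {"flight", "fly", "book"},
    "check_weather": {"weather", "forecast", "rain"},
    "play_music": {"play", "song", "music"},
}
# Step 2 stand-in: a capitalized word after "to" or "in" is treated as a location.
LOCATION_PATTERN = re.compile(r"\b(?:to|in)\s+([A-Z][a-z]+)")


def classify_intent(text: str) -> str:
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    scores = {name: len(tokens & words) for name, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def extract_entities(text: str) -> dict:
    match = LOCATION_PATTERN.search(text)
    return {"location": match.group(1)} if match else {}


# Step 3: map each intent to an action that consumes the extracted entities.
HANDLERS = {
    "book_flight": lambda e: f"Searching flights to {e.get('location', 'your destination')}.",
    "check_weather": lambda e: f"Fetching the forecast for {e.get('location', 'your area')}.",
    "play_music": lambda e: "Starting playback.",
}


def handle(text: str) -> str:
    handler = HANDLERS.get(classify_intent(text))
    if handler is None:
        return "Sorry, I can't help with that yet."
    return handler(extract_entities(text))


print(handle("Book a flight to Paris"))
print(handle("Tell me a joke"))
```

The second call falls outside the known intents, which is the kind of rigid behavior that frustrated users of early commercial bots.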

Facebook's (now Meta's) launch of its Messenger Platform in 2016 allowed developers to create chatbots that could reach millions of users, sparking a wave of commercial interest. Many businesses rushed to implement chatbots, though results were mixed. Early commercial implementations often frustrated users with limited understanding and rigid conversation flows.

The technical architecture of conversational systems also evolved during this period. The typical approach involved a pipeline of specialized components:

1. Automatic Speech Recognition (for voice interfaces)
2. Natural Language Understanding
3. Dialog Management
4. Natural Language Generation
5. Text-to-Speech (for voice interfaces)

Each component could be optimized separately, allowing for incremental improvements. However, these pipeline architectures sometimes suffered from error propagation – mistakes in early stages would cascade through the system.
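Error propagation is easy to see in a toy version of such a pipeline. In the sketch below every stage is a stub with invented behavior (the "noisy" flag simulates a speech recognizer mishearing a city name), and the text-to-speech stage is omitted.

```python
def asr(state: dict) -> dict:
    # Stub recognizer: under simulated noise it mishears "Austin" as "Boston".
    transcript = state["spoken_text"]
    if state.get("noisy"):
        transcript = transcript.replace("Austin", "Boston")
    return {**state, "transcript": transcript}


def nlu(state: dict) -> dict:
    # Stub understanding: a weather question's last word is taken as the city.
    words = state["transcript"].rstrip("?.!").split()
    is_weather = "weather" in state["transcript"].lower()
    return {
        **state,
        "intent": "check_weather" if is_weather else "unknown",
        "city": words[-1] if is_weather else None,
    }


def dialog_manager(state: dict) -> dict:
    action = "report_weather" if state["intent"] == "check_weather" else "ask_rephrase"
    return {**state, "action": action}


def nlg(state: dict) -> dict:
    if state["action"] == "report_weather":
        reply = f"Here is the weather for {state['city']}."
    else:
        reply = "Could you rephrase that?"
    return {**state, "reply": reply}


PIPELINE = [asr, nlu, dialog_manager, nlg]


def run(spoken_text: str, noisy: bool = False) -> str:
    state = {"spoken_text": spoken_text, "noisy": noisy}
    for stage in PIPELINE:
        state = stage(state)  # each stage trusts whatever the previous one produced
    return state["reply"]


print(run("What is the weather in Austin?"))
print(run("What is the weather in Austin?", noisy=True))
```

In the noisy run, no later stage has any way to detect the recognizer's mistake, so the wrong city flows straight through to the final reply.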

While machine learning significantly improved capabilities, systems still struggled with maintaining context over long conversations, understanding implicit information, and generating truly diverse and natural responses. The next breakthrough would require a more radical approach.

The Transformer Revolution: Neural Language Models

The Transformer architecture, introduced by Google researchers in 2017, enabled the development of increasingly powerful language models. In 2018, Google introduced BERT (Bidirectional Encoder Representations from Transformers), which dramatically improved performance on various language understanding tasks. In 2019, OpenAI released GPT-2, demonstrating unprecedented abilities in generating coherent, contextually relevant text.

The most dramatic leap came in 2020 with GPT-3, scaling up to 175 billion parameters (compared to GPT-2's 1.5 billion, a more than hundredfold increase). This massive increase in scale, combined with architectural refinements, produced qualitatively different capabilities. GPT-3 could generate remarkably human-like text, track context across a window of roughly 2,000 tokens, and even perform tasks it wasn't explicitly trained on when given only a few examples in the prompt.

For conversational AI, these advances translated to chatbots that could:

Maintain coherent conversations over many turns

Understand nuanced queries without explicit training

Generate diverse, contextually appropriate responses

Adapt their tone and style to match the user

Handle ambiguity and clarify when necessary

The release of ChatGPT in late 2022 brought these capabilities to the mainstream, attracting over a million users within days of its launch. Suddenly, the general public had access to conversational AI that seemed qualitatively different from anything that came before – more flexible, more knowledgeable, and more natural in its interactions.

Commercial implementations quickly followed, with companies incorporating large language models into their customer service platforms, content creation tools, and productivity applications. The rapid adoption reflected both the technological leap and the intuitive interface these models provided – conversation is, after all, the most natural way for humans to communicate.

Multimodal Capabilities: Beyond Text-Only Conversations

Text-to-image models like DALL-E, Midjourney, and Stable Diffusion demonstrated the ability to generate images from textual descriptions, while vision-language models like GPT-4 with vision capabilities could analyze images and discuss them intelligently. This opened new possibilities for conversational interfaces:

Customer service bots that can analyze photos of damaged products

Shopping assistants that can identify items from images and find similar products

Educational tools that can explain diagrams and visual concepts

Accessibility features that can describe images for visually impaired users

Voice capabilities have also advanced dramatically. Early speech interfaces like IVR (Interactive Voice Response) systems were notoriously frustrating, limited to rigid commands and menu structures. Modern voice assistants can understand natural speech patterns, account for different accents and speech impediments, and respond with increasingly natural-sounding synthesized voices.

The fusion of these capabilities is creating truly multimodal conversational AI that can seamlessly switch between different communication modes based on context and user needs. A user might start with a text question about fixing their printer, send a photo of the error message, receive a diagram highlighting relevant buttons, and then switch to voice instructions while their hands are busy with the repair.

This multimodal approach represents not just a technical advancement but a fundamental shift toward more natural human-computer interaction – meeting users in whatever communication mode works best for their current context and needs.

Retrieval-Augmented Generation: Grounding AI in Facts

Retrieval-Augmented Generation (RAG) emerged as a solution to two weaknesses of standalone language models: they can state incorrect facts with confidence, and their knowledge is frozen at the training cutoff. Rather than relying solely on parameters learned during training, RAG systems combine the generative abilities of language models with retrieval mechanisms that can access external knowledge sources.

The typical RAG architecture works like this:

1. The system receives a user query
2. It searches relevant knowledge bases for information pertinent to the query
3. It feeds both the query and the retrieved information to the language model
4. The model generates a response grounded in the retrieved facts
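The flow above can be sketched in a few functions. This is a simplified illustration: retrieval here is plain word overlap rather than the embedding-based search most production systems use, the documents are invented, and `llm` is a placeholder for whatever function calls your language model.

```python
import re
from typing import Callable

# Hypothetical knowledge base entries for a banking assistant.
DOCUMENTS = [
    "Wire transfers over $10,000 require two-factor approval.",
    "Branch offices are open 9am to 5pm on weekdays.",
    "Lost cards can be frozen instantly from the mobile app.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Step 2: rank documents by word overlap with the query, keep the top k."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Step 3: combine the query and the retrieved passages into one prompt."""
    context = "\n".join(f"- {p}" for p in passages) or "- (no relevant passages found)"
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def answer(query: str, documents: list[str], llm: Callable[[str], str]) -> str:
    """Steps 1 to 4: retrieve, build the prompt, and let the model generate."""
    return llm(build_prompt(query, retrieve(query, documents)))


# With an echoing stand-in for the model, this prints the grounded prompt itself.
print(answer("How do I freeze a lost card?", DOCUMENTS, llm=lambda prompt: prompt))
```

Because the retrieved passages are kept as a list, the same structure makes it straightforward to return them alongside the answer for attribution.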

This approach offers several advantages:

More accurate, factual responses by grounding generation in verified information

The ability to access up-to-date information beyond the model's training cutoff

Specialized knowledge from domain-specific sources like company documentation

Transparency and attribution by citing the sources of information

For businesses implementing conversational AI, RAG has proven particularly valuable for customer service applications. A banking chatbot, for instance, can access the latest policy documents, account information, and transaction records to provide accurate, personalized responses that would be impossible with a standalone language model.

The evolution of RAG systems continues with improvements in retrieval accuracy, more sophisticated methods for integrating retrieved information with generated text, and better mechanisms for evaluating the reliability of different information sources.

The Human-AI Collaboration Model: Finding the Right Balance

The most successful implementations today follow a collaborative model where:

The AI handles routine, repetitive queries that don't require human judgment

Humans focus on complex cases requiring empathy, ethical reasoning, or creative problem-solving

The system knows its limitations and smoothly escalates to human agents when appropriate

The transition between AI and human support is seamless for the user

Human agents have full context of the conversation history with the AI

AI continues to learn from human interventions, gradually expanding its capabilities
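A minimal sketch of the escalation logic might look like this. The confidence threshold, the list of sensitive topics, and the `route` function are assumptions made for illustration; in practice the confidence score and topic label would come from your own models.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.7  # hypothetical cutoff below which the AI defers
SENSITIVE_TOPICS = {"complaint", "legal", "bereavement"}  # hypothetical list


@dataclass
class Conversation:
    history: list[str] = field(default_factory=list)
    handled_by: str = "ai"


def route(conv: Conversation, user_message: str, ai_reply: str,
          confidence: float, topic: str) -> dict:
    """Let the AI answer routine queries; escalate the rest with full context."""
    conv.history.append(f"user: {user_message}")
    if confidence < CONFIDENCE_THRESHOLD or topic in SENSITIVE_TOPICS:
        conv.handled_by = "human"
        # The human agent receives the whole transcript, not just the last message.
        return {"handler": "human", "context": list(conv.history)}
    conv.history.append(f"ai: {ai_reply}")
    return {"handler": "ai", "reply": ai_reply}


conv = Conversation()
print(route(conv, "Reset my password", "Use the reset link.", 0.95, "account"))
print(route(conv, "I want to file a complaint", "I can help with that.", 0.90, "complaint"))
```

Logging which conversations were escalated, and how the human resolved them, is one way to collect the examples the AI later learns from.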

This approach recognizes that conversational AI shouldn't aim to completely replace human interaction, but rather to complement it – handling the high-volume, straightforward queries that consume human agents' time while ensuring complex issues reach the right human expertise.

The implementation of this model varies across industries. In healthcare, AI chatbots might handle appointment scheduling and basic symptom screening while ensuring medical advice comes from qualified professionals. In legal services, AI might help with document preparation and research while leaving interpretation and strategy to attorneys. In customer service, AI can resolve common issues while routing complex problems to specialized agents.

As AI capabilities continue to advance, the line between what requires human involvement and what can be automated will shift, but the fundamental principle remains: effective conversational AI should enhance human capabilities rather than simply replace them.

The Future Landscape: Where Conversational AI Is Headed

Personalization at scale: Future systems will increasingly tailor their responses not just to the immediate context but to each user's communication style, preferences, knowledge level, and relationship history. This personalization will make interactions feel more natural and relevant, though it raises important questions about privacy and data usage.

Emotional intelligence: While today's systems can detect basic sentiment, future conversational AI will develop more sophisticated emotional intelligence – recognizing subtle emotional states, responding appropriately to distress or frustration, and adapting its tone and approach accordingly. This capability will be particularly valuable in customer service, healthcare, and education applications.

Proactive assistance: Rather than waiting for explicit queries, next-generation conversational systems will anticipate needs based on context, user history, and environmental signals. A system might notice you're scheduling several meetings in an unfamiliar city and proactively offer transportation options or weather forecasts.

Seamless multimodal integration: Future systems will move beyond simply supporting different modalities to seamlessly integrating them. A conversation might flow naturally between text, voice, images, and interactive elements, choosing the right modality for each piece of information without requiring explicit user selection.

Specialized domain experts: While general-purpose assistants will continue to improve, we'll also see the rise of highly specialized conversational AI with deep expertise in specific domains – legal assistants that understand case law and precedent, medical systems with comprehensive knowledge of drug interactions and treatment protocols, or financial advisors versed in tax codes and investment strategies.

Truly continuous learning: Future systems will move beyond periodic retraining to continuous learning from interactions, becoming more helpful and personalized over time while maintaining appropriate privacy safeguards.

Despite these exciting possibilities, challenges remain. Privacy concerns, bias mitigation, appropriate transparency, and establishing the right level of human oversight are ongoing issues that will shape both the technology and its regulation. The most successful implementations will be those that address these challenges thoughtfully while delivering genuine value to users.

What's clear is that conversational AI has moved from a niche technology to a mainstream interface paradigm that will increasingly mediate our interactions with digital systems. The evolutionary path from ELIZA's simple pattern matching to today's sophisticated language models represents one of the most significant advances in human-computer interaction – and the journey is far from over.